Analytical Data Quality Review: Verification, Validation, and Usability

ITRC has developed a series of fact sheets that summarize the latest science, engineering, and technologies regarding environmental data management (EDM) best practices. This fact sheet describes:

- the importance of verification in relation to data quality

- what makes validation of analytical chemistry data different from other types of validation tasks

- how verification and validation are used to assess overall data quality and usability

- various federal and state resources related to data quality review

1. INTRODUCTION

Some degree of data review should be performed on all data that is used for decision-making. How extensive that review needs to be depends on the intended use of the data and any regulatory requirements.

A clear understanding of the data needs and expectations is crucial. Establishing data quality objectives (DQOs) and requirements to be met before data is used in project decisions, evaluations, and conclusions is essential to managing defensible environmental data. For some projects, these DQOs are formally developed in various planning documents, such as quality assurance project plans (QAPPs), where data quality indicators of precision, accuracy/bias, representativeness, comparability, completeness, and sensitivity (PARCCS) are well defined. Less formally, DQOs might be established in various data management standard operating procedures (SOPs) or documented best practices.

This document discusses standard terminology and concepts associated with analytical data quality review and its use in assessing overall data quality and usability.

2. DEFINITIONS AND OVERVIEW

2.1 Verification

The USEPA Guidance on Environmental Data Verification and Data Validation (USEPA 2002) defines verification as “the process of evaluating the completeness, correctness, and conformance/compliance of a specific data set against the method, procedural, or contractual requirements.” This definition focuses on environmental analytical data and would include activities such as reviewing sample chains of custody (COC), comparing data in electronic data deliverables (EDDs) to paper or electronic laboratory reports, and reviewing laboratory data packages against project PARCCS criteria.
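One verification task named above, comparing EDD data to the laboratory report, can be partly automated. The sketch below is a minimal illustration, not a prescribed method: it assumes both the EDD and the report have already been parsed into simple records, and the `Result` fields and the `compare_edd_to_report` function are hypothetical names chosen for this example rather than any standard EDD format. It checks completeness (records present in one source but not the other) and correctness (unit or value disagreements).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """One reported result; field names are hypothetical, not a standard EDD format."""
    sample_id: str
    analyte: str
    value: float
    units: str


def compare_edd_to_report(edd, report, tol=0.0):
    """List discrepancies between EDD rows and the corresponding laboratory report rows."""
    edd_index = {(r.sample_id, r.analyte): r for r in edd}
    report_index = {(r.sample_id, r.analyte): r for r in report}
    issues = []
    # Completeness: every reported result should appear in the EDD, and vice versa
    for key in sorted(report_index.keys() - edd_index.keys()):
        issues.append(f"missing from EDD: {key}")
    for key in sorted(edd_index.keys() - report_index.keys()):
        issues.append(f"not in laboratory report: {key}")
    # Correctness: records present in both sources should agree on units and value
    for key in sorted(edd_index.keys() & report_index.keys()):
        e, r = edd_index[key], report_index[key]
        if e.units != r.units:
            issues.append(f"unit mismatch {key}: {e.units} vs {r.units}")
        elif abs(e.value - r.value) > tol:
            issues.append(f"value mismatch {key}: {e.value} vs {r.value}")
    return issues


edd = [Result("MW-1", "benzene", 5.0, "ug/L"), Result("MW-1", "toluene", 2.0, "ug/L")]
report = [
    Result("MW-1", "benzene", 5.0, "ug/L"),
    Result("MW-1", "toluene", 2.5, "ug/L"),
    Result("MW-2", "benzene", 1.0, "ug/L"),
]
for issue in compare_edd_to_report(edd, report):
    print(issue)
```

A real verification would also cover items such as chain-of-custody information and qualifiers; this sketch only shows the record-matching step.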

While the focus of this document is on analytical data review, all environmental data collection efforts will have some verification needs. This verification could include review of metadata, proper sample naming conventions, location data, lithology, water levels, field observations, or keyword tags on media files, for example. This type of verification is crucial for assessing overall data quality. The Using Data Quality Dimensions to Assess and Manage Data Quality subtopic sheet provides a table of key considerations for assessing the data quality dimensions of integrity, unambiguity, consistency, completeness, and correctness for most types of environmental project data, including analytical and non-analytical data, throughout the project lifecycle.

Data verification needs will vary depending on program/project DQOs and regulatory requirements, but all data collection activities should have a standard, and preferably documented, verification process. Verification tasks should be assigned to an individual familiar with the data being verified and ideally performed as soon as possible to identify errors while there may still be opportunities to correct and improve the overall quality of the data.

If laboratory QC data is provided in an EDD with the sample data, it can be valuable to perform a data quality screening using an automated review tool during the verification process. These tools apply user-defined PARCCS criteria and/or historical data to provide quick, consistent screening of data sets. Screening tools can help identify outliers, flag potentially unusable data, or, in the case of trend charts (USEPA 2011), show changes over time.
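The screening step described above can be sketched as a comparison of QC records against user-defined acceptance limits, plus a crude historical-range check. This is a minimal sketch under stated assumptions: the `QCRecord` fields, the QC type codes, and the recovery limits in `CRITERIA` are hypothetical placeholders, not values from any guidance document. The min-max historical check is a deliberately simple stand-in for the statistical or trend-based outlier methods a real screening tool might use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QCRecord:
    batch: str
    qc_type: str          # e.g. "MS" (matrix spike) or "LCS"; codes are hypothetical
    analyte: str
    recovery_pct: float


# Hypothetical user-defined accuracy criteria: (qc_type, analyte) -> (lower %, upper %)
CRITERIA = {
    ("MS", "benzene"): (70.0, 130.0),
    ("LCS", "benzene"): (80.0, 120.0),
}


def screen_recoveries(records, criteria):
    """Return (record, finding) pairs for QC recoveries that fail or lack criteria."""
    findings = []
    for rec in records:
        limits = criteria.get((rec.qc_type, rec.analyte))
        if limits is None:
            findings.append((rec, "no criteria defined"))
            continue
        low, high = limits
        if rec.recovery_pct < low:
            findings.append((rec, "low recovery"))
        elif rec.recovery_pct > high:
            findings.append((rec, "high recovery"))
    return findings


def outside_historical_range(value, history):
    """Flag a result outside the min-max range of earlier results for the same location and analyte."""
    if not history:
        return False
    return value < min(history) or value > max(history)


records = [
    QCRecord("B1", "MS", "benzene", 65.0),
    QCRecord("B1", "LCS", "benzene", 95.0),
    QCRecord("B1", "MS", "toluene", 100.0),
]
for rec, finding in screen_recoveries(records, CRITERIA):
    print(rec.batch, rec.qc_type, rec.analyte, finding)
```

Screening output like this identifies candidates for closer review; it does not assign validation qualifiers.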

2.2 Validation

Validation, in the context of environmental analytical data quality, is a formal analyte- and sample-specific review process that extends beyond verification to determine the analytical quality of a specific data set (USEPA 2002). Because of variability in field collection and laboratory analysis, it is highly unlikely that analytical data collected for any regulatory program will always meet established PARCCS criteria. Verification can determine whether data met various data quality indicators, but validation goes a step further by determining (and documenting) how failure to meet method, procedural, or contractual requirements affects the quality of the associated data.

While verification, if performed promptly, may provide opportunities to improve data quality, validation in the above context cannot change the quality of an analytical data set; it only defines it. In other words, validation cannot turn low-quality data into high-quality data, but rather identifies where data may lack the quality needed for a project DQO and/or regulatory standard.

It is important to acknowledge that the term “validation” is a general term that can have different meanings depending on the industry and can even have different definitions within the environmental data community. Software engineers, database developers, GIS professionals, statisticians, analytical laboratory personnel, and environmental chemistry validators might all perform validation on data in some capacity. In some work environments, a project team could include all of these individuals, and miscommunication becomes more likely. In very general terms, “validation” is the process of ensuring accuracy and quality of data. In practice, how each group of people performs validation, and the goals of that validation, can differ. It is extremely important when different groups are interacting with each other that everyone understands what type of validation is being discussed, who will be performing the task, and what the goals of the validation task are.

In the context of this document, validation refers to the review of analytical data performed by an environmental chemistry validator after receipt of a final laboratory data package and before final data analysis. When validation status and/or review level is recorded within an environmental data management system (EDMS), those entries typically relate to this specific analytical data validation process, since analytical chemistry validation is often a component of regulatory compliance. Other types of validation may be performed on the same data before (such as validation performed at the analytical laboratory) or after analytical chemistry validation (such as during statistical analysis), but this document focuses narrowly on analytical chemistry validation. For the remainder of this fact sheet, we will use the term “validation” only in the specific meaning defined for analytical chemistry data at the beginning of this section, and will use “verification” or the generic term “review” for all other data review activities.

Documenting Quality of Non-Analytical Data

Analytical data typically have well-defined, easily measured data quality objectives and an established system for documenting quality through validation qualifiers. When these qualifiers are stored within an EDMS, they are readily accessible to all data users alongside the associated data. In contrast, documenting the quality of non-analytical data is often less defined within the environmental protection industry. If information on the quality of non-analytical data is stored within an EDMS, how it is stored is much more likely to vary between organizations. Quality information for non-analytical data may be stored only in metadata or in supporting project documents outside the EDMS, and data users may not know that it exists or where to find it. While developing a standardized process for documenting the quality of non-analytical environmental data is beyond the scope of these fact sheets, the ITRC EDM Best Practices Team recognizes this as an area for improvement within the industry.

2.3 Overall Program / Project Data Quality

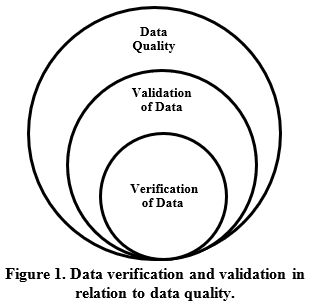

Verification is the first step in determining overall data quality and compliance with DQOs and any defined PARCCS criteria. Validation builds on verification and helps define analytical data quality through the assignment of validation qualifiers. Formal validation can be omitted from the overall data quality review process, but the analytical quality of the data will then be less well defined, and the overall data quality assessment may take more time and effort. As shown in Figure 1, verification and validation are important steps toward assessing data quality, but they do not by themselves form a complete picture of overall data quality. All data quality dimensions (see the Using Data Quality Dimensions to Assess and Manage Data Quality subtopic sheet), including the quality of supporting non-analytical data, should be considered before data are used for final reporting or decision-making. For example, if analytical data are assigned to an incorrect sample location in a data table or figure, the quality of the analytical data becomes largely irrelevant. Even small data errors or omissions can have drastic impacts on overall data quality.

2.4 Data Usability

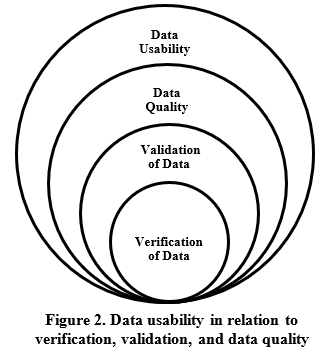

Data usability is determined by the project team after verification, validation, or any other data quality review is complete and the overall quality of the collected data is known. Put simply, a data usability assessment determines whether the analytical data are of sufficient quality for their intended use. As shown in Figure 2, verification, validation, and overall data quality are important inputs to determining data usability, but overall program or project data usability considers additional factors beyond data quality alone. If data have not been validated, those performing a usability assessment will need to refer to any other available data quality review findings and/or perform a more thorough review of the laboratory reports and laboratory flags to assess PARCCS parameters and their impacts on overall data quality. While verification and validation activities can be a significant expense in an overall program or project budget, these tasks help streamline the final usability assessment, guard against more costly surprises during final reporting, and reduce the likelihood of misinterpretation. Additional details on using validation qualifiers during a usability assessment are provided in Section 3.3.3.

The ITRC Incremental Sampling Methodology guidance provides additional details on performing a data usability assessment, and breaks the process down into four main steps:

- Review project objectives and sampling design.

- Review the verification/validation outputs and evaluate conformance to performance criteria.

- Document data usability, update the conceptual site model, apply decision rules, and draw conclusions.

- Document lessons learned and make recommendations.

Usability assessments will vary greatly depending on the project and the established DQOs. ITRC provides additional guidance related to the following:

- Incremental sampling methodology (ISM): Data Quality Evaluation

- Per- and polyfluoroalkyl substances (PFAS): Section 11.3 Data Evaluation

- 1,4-dioxane: Section 4.3 1,4-Dioxane Data Evaluation

3. PERFORMING ANALYTICAL DATA QUALITY REVIEWS

This section provides additional details related to planning, performing, and using analytical data quality reviews as part of a program or project’s overall data quality assessment.

3.1 Third-Party Data Review

Analytical data quality reviews can be performed by third-party firms or “in-house.” Third-party data review is when the review is performed by an organization that is not involved in the planning, sampling, or final reporting of the data. Third-party review provides a level of impartiality since the reviewer is not directly tied to potential consequences of identifying data quality deficiencies, and it is sometimes required by a regulator or preferred over internal methods for a variety of time, cost, and liability reasons. When a third party is used, it is essential that they be provided with all necessary project information to perform the requested review, including related planning documents, the appropriate type of laboratory report, any quality-related field data, and direction on appropriate review guidance to use.

3.2 Stages of Analytical Data Quality Reviews

At its most rigorous, the analytical data quality review process can be complex, and many terms are used to distinguish different types of review. Not all regulators require “validation” as defined in this document, but some may have an alternative formal analytical data quality review process in place. Section 4 of this document includes a list of commonly used federal and state data review guidance documents.

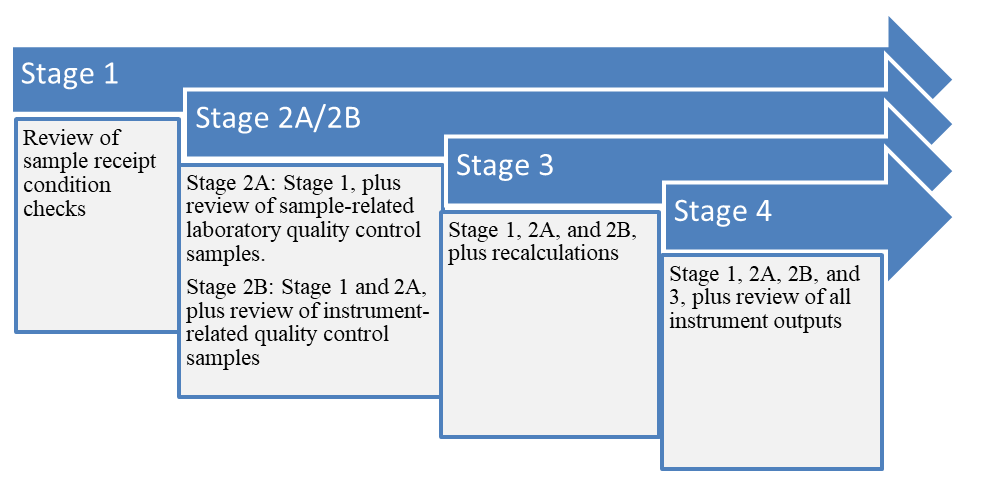

The complexity of data review can generally be broken up into categories, levels, tiers, or stages. For simplicity, stages will be the default term used in this document, with the understanding that other terms are used by different state or federal agencies. As illustrated in Figure 3, each successive type of review usually builds upon the previous. For example, the USEPA Guidance for Labeling Externally Validated Laboratory Analytical Data for Superfund Use (USEPA 2009) breaks the data quality review process down into four main stages: Stage 1, Stage 2A/2B, Stage 3, and Stage 4. Stage 1 is the least complex review and Stage 4 is the most complex. Higher stages of review include everything reviewed under the lower stages.

Figure 3. Example of USEPA stages showing increasing data review complexity.

From a practical standpoint, the stage of data review performed will depend on the program/project DQOs and the type of laboratory report deliverable available. For example, a Stage 3 review, where results are recalculated, cannot be performed without a laboratory data package that includes raw instrument data. Note that the analytical laboratory may use similar tier/level/stage/category terminology for its deliverables, which may or may not correspond to the data review stage required. The stage of review needed may also be influenced by other situational factors, such as litigation liability, budget, and resource limitations. As the complexity of the review increases, uncertainty about the quality of the data decreases. It is up to the project team during planning to ensure that an appropriate review stage is identified and that the requested laboratory deliverables contain all the data the data reviewer needs to perform that stage of review. Additionally, if only a subset of data is to be reviewed at any stage, care should be taken to select an appropriate representative data set. If performed, an automated data quality screening during verification can be a useful tool to help target data sets for review.

3.3 Applying Validation Qualifiers

When data quality issues are assessed during analytical chemistry validation, a validator will typically assign a validation qualifier that defines the quality of the impacted analytical result. The validator can also decide that a verified exceedance does not impact the overall quality of the associated result and no qualifier will be assigned. Validation qualifiers can be project- or program-specific and ideally should be clearly defined in project planning documents.

3.3.1 Validation Qualifiers and Laboratory Data Flags

Common Validation Qualifiers

(adapted from EPA National Functional Guidelines, 2020)

- U: The material was analyzed for but was not detected above the level of the associated value. The associated value is either the sample quantitation limit or the sample detection limit.

- UJ: The material was analyzed for but was not detected. The level at which the material is not detected is an estimate and may be inaccurate or imprecise.

- J: The analyte was positively identified; the associated numerical value is the approximate concentration of the analyte in the sample (may also include a bias indicator).

- R*: The data are unusable. The sample results are rejected due to serious deficiencies in meeting QC criteria. The analyte may or may not be present in the sample.

*Not all regulators mark data as unusable during validation.

Validation qualifiers should not be confused with laboratory data flags (often referred to as laboratory qualifiers). Some laboratory data flags can be similar to validation qualifiers and might denote or imply quality; however, many laboratory data flags are strictly informational in nature. Additionally, laboratory data flags can be highly variable between laboratories.

In many cases, the overall quality of a result cannot be determined from laboratory flags alone. For example, suppose the laboratory has assigned an “M” flag to a result, denoting that the associated matrix spike recovery was outside the laboratory’s established acceptance range. The validator assesses the “M” flag and determines whether the associated result should be qualified as estimated (J), rejected (R), or left unqualified, based on potential bias, the severity of the exceedance, and other quality control findings. In this scenario, the laboratory documented a criteria failure, the verification process confirmed that the laboratory properly documented it, and the validation process determined how that failure affected the quality of the result.
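To make the “M” flag example concrete, the validator's reasoning about a single matrix spike recovery can be sketched as a small decision function. This is a hypothetical illustration only: the `reject_below` threshold, the choice of returning `None` for high recovery on a non-detect, and the function name are assumptions for this sketch, not rules from the National Functional Guidelines or any other guidance. It also ignores the “other quality control findings” a real validator would weigh.

```python
def assess_ms_recovery(recovery_pct, lower, upper, detected, reject_below=10.0):
    """Suggest a qualifier ('J', 'UJ', 'R') or None for one result based on MS recovery alone.

    All thresholds and outcomes are hypothetical placeholders; real rules come from
    the project's planning documents and the validation guidance they specify.
    """
    if lower <= recovery_pct <= upper:
        return None                       # within limits: MS recovery alone does not qualify
    if recovery_pct > upper:
        # High bias: a detected result is estimated; a non-detect is not affected
        return "J" if detected else None
    # Low recovery (below the lower limit)
    if recovery_pct < reject_below and not detected:
        return "R"                        # severe low bias: a non-detect may be a false negative
    return "J" if detected else "UJ"


print(assess_ms_recovery(140.0, 70.0, 130.0, detected=True))   # high bias, detected result
print(assess_ms_recovery(50.0, 70.0, 130.0, detected=False))   # low bias, non-detect
```

The point of the sketch is the structure of the decision (direction of bias, whether the analyte was detected, severity of the exceedance), not the specific cutoffs.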

Additionally, laboratory flags typically do not take into account field observations and field quality control samples such as field blanks and field duplicates. In the case of blind field duplicates, the laboratory has no knowledge of the associated parent sample and has no way to assess the precision of the results. Relying solely on laboratory flags to assess PARCCS compliance could overlook important data quality indicators.
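Because only the field team knows which blind duplicate belongs to which parent sample, the precision check has to be done after the data are received. A common precision measure is the relative percent difference, RPD = |x1 - x2| / ((x1 + x2) / 2) × 100. The sketch below pairs blind duplicates with their parents and computes RPD per analyte. The `duplicate_map` structure and the 30% `max_rpd` limit are hypothetical; project criteria, and the special handling that many guidance documents apply to non-detects and results near the reporting limit, are outside this sketch.

```python
def relative_percent_difference(parent, duplicate):
    """RPD = |x1 - x2| / mean(x1, x2) * 100; returns 0.0 when both values are zero."""
    mean = (parent + duplicate) / 2.0
    if mean == 0:
        return 0.0
    return abs(parent - duplicate) / mean * 100.0


def evaluate_field_duplicates(results, duplicate_map, max_rpd=30.0):
    """Compare each blind duplicate with its parent sample.

    results: sample_id -> {analyte: concentration}
    duplicate_map: blind duplicate ID -> parent sample ID (known to the field team, not the lab)
    max_rpd: hypothetical project precision limit, in percent
    """
    findings = []
    for dup_id, parent_id in duplicate_map.items():
        parent, dup = results[parent_id], results[dup_id]
        for analyte in sorted(parent.keys() & dup.keys()):
            rpd = relative_percent_difference(parent[analyte], dup[analyte])
            findings.append((parent_id, dup_id, analyte, round(rpd, 1), rpd > max_rpd))
    return findings


results = {"MW-1": {"benzene": 10.0}, "DUP-1": {"benzene": 14.0}}
print(evaluate_field_duplicates(results, {"DUP-1": "MW-1"}))
```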

Validation qualifiers can either replace or combine with laboratory data flags depending on the project or program requirements. A validator can also agree with an assigned laboratory flag and retain the laboratory flag as a final qualifier without modification. It is good practice to record laboratory flags and validation qualifiers separately so it is clear whether the final data qualifier was assigned by the laboratory or the validator.
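Recording laboratory flags and validation qualifiers in separate fields can be sketched with a simple record type. This is an illustrative data structure, not an EDMS schema: the field names, the `final_qualifier` method, and the two policy names (`"replace"` and `"combine"`, with combined qualifiers joined by a space) are hypothetical stand-ins for whatever a project's planning documents specify.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnalyticalResult:
    sample_id: str
    analyte: str
    value: float
    lab_flag: Optional[str] = None              # as reported by the laboratory; never overwritten
    validation_qualifier: Optional[str] = None  # assigned by the validator (may repeat the lab flag)

    def final_qualifier(self, policy: str = "replace") -> Optional[str]:
        """Derive the qualifier shown to data users under a project-defined policy."""
        if policy == "replace":
            # The validator's qualifier supersedes the lab flag; a retained lab flag
            # is recorded by the validator entering it as the validation qualifier.
            return self.validation_qualifier
        if policy == "combine":
            parts = [q for q in (self.validation_qualifier, self.lab_flag) if q]
            return " ".join(parts) or None
        raise ValueError(f"unknown qualifier policy: {policy}")


result = AnalyticalResult("MW-1", "benzene", 5.2, lab_flag="M", validation_qualifier="J")
print(result.final_qualifier(), "|", result.final_qualifier("combine"), "| lab flag:", result.lab_flag)
```

Keeping both fields means the origin of the final qualifier can always be traced, whichever policy a project adopts.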

It is worth noting that the rejection of data during validation is not universal. The U.S. DOD General Data Validation Guidelines (DOD 2019) document, for example, uses an interim “X” qualifier (exclusion of data recommended) instead of an “R” qualifier during validation, with only the project team assigning either an “R” (rejected) or “J” (estimation) final qualifier as appropriate during the usability assessment. The sometimes-blurred line between documenting quality during validation and determining usability is another reason why the approach for assigning appropriate qualifiers during validation should be decided, documented, and communicated prior to any validation being performed.

3.3.1.1 Qualifier Reason Codes

Since validation qualifiers usually denote a general overall quality of the result, and there may be more than one reason a result is qualified, reason codes can provide additional information in these situations. Reason codes provide additional details without having to read the laboratory or validation report, while keeping validation qualifiers concise, consistent, and easy to interpret for a data user. For example, a validator has qualified a benzene result as estimated (J). The data user knows this result should be considered an estimate but does not know why the result is estimated unless they refer to the validation report. If using reason codes, the validator would apply the same estimated (J) qualifier, but also apply reason codes, “MS1” and “FD1” for example, to indicate that this result had a matrix spike percent recovery and a field duplicate relative percent difference that exceeded the project’s established criteria. While one data user might only need the (J) qualifier, a different data user could use the reason codes for identifying trends in quality control issues or refining quality assurance procedures. If reason codes are being used, a list of acceptable reason codes and their definitions should be well documented in planning documents and any other document where they are displayed. It is recommended that reason codes be displayed with, but clearly separated from, validation qualifiers if reported.
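The reason-code practice described above can be sketched as a documented code list, a display function that keeps codes visually separate from the qualifier, and a simple trend count. The “MS1” and “FD1” codes follow the example in the text; the “LB1” code, the definitions, the parenthesized display format, and all function names are hypothetical.

```python
from collections import Counter

# Hypothetical documented list of reason codes; MS1 and FD1 follow the benzene example above
REASON_CODES = {
    "MS1": "Matrix spike percent recovery outside project criteria",
    "FD1": "Field duplicate relative percent difference outside project criteria",
    "LB1": "Analyte detected in associated laboratory method blank",
}


def format_qualified(qualifier, reason_codes):
    """Display a qualifier with its reason codes kept clearly separate, e.g. 'J (MS1, FD1)'."""
    unknown = [code for code in reason_codes if code not in REASON_CODES]
    if unknown:
        raise ValueError(f"undocumented reason code(s): {unknown}")
    if not reason_codes:
        return qualifier
    return f"{qualifier} ({', '.join(reason_codes)})"


def reason_code_trends(qualified_results):
    """Count how often each reason code appears across (qualifier, reason_codes) pairs."""
    return Counter(code for _, codes in qualified_results for code in codes)


print(format_qualified("J", ["MS1", "FD1"]))
print(reason_code_trends([("J", ["MS1", "FD1"]), ("J", ["MS1"]), ("U", ["LB1"])]))
```

Rejecting undocumented codes at entry time is one way to enforce the requirement that every reason code have a single documented definition.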

3.3.2 Managing and Reporting Qualified Data

Validation qualifiers, and reason codes if used, should be treated as part of the analytical result and stored with the result in an EDMS. Validation qualifiers and reason codes should be unique valid values with a single documented definition. Care should be taken when adding validation qualifiers and reason codes into any EDMS. This is not always a simple data entry task, and there may be instances where a result is assigned multiple validation qualifiers, and an assessment of a final qualifier needs to be made based on established hierarchy rules. Ideally, the validator, or a well-trained designee, should be responsible for assigning a final qualifier and appropriate reason codes for each analytical result to avoid misinterpretations. This can be done via a data table, EDD, or both.
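When one result collects several validation qualifiers, a documented hierarchy resolves them into a single final qualifier. The function below is a minimal sketch of one possible hierarchy, assumed for illustration only: rejection (R) overrides everything, and a non-detect qualifier combined with an estimated qualifier becomes UJ. A project's actual hierarchy rules, and any additional qualifiers it uses, would come from its planning documents.

```python
def final_qualifier(qualifiers):
    """Resolve multiple qualifiers on one result using a hypothetical hierarchy.

    Assumed rules: R overrides all; any U-type plus any J-type gives UJ;
    otherwise U or J. Returns None when no qualifiers were assigned.
    """
    qs = {q.upper() for q in qualifiers if q}
    if not qs:
        return None
    unknown = qs - {"R", "U", "UJ", "J"}
    if unknown:
        raise ValueError(f"qualifier(s) not covered by the hierarchy: {sorted(unknown)}")
    if "R" in qs:
        return "R"
    non_detect = any(q.startswith("U") for q in qs)
    estimated = any(q.endswith("J") for q in qs)
    if non_detect and estimated:
        return "UJ"
    if non_detect:
        return "U"
    return "J"


print(final_qualifier(["U", "J"]))  # blank-related U plus an estimated J on the same result
```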

3.3.3 Using Validation Qualifiers during a Usability Assessment

If analytical data has been validated, anyone performing a data usability assessment should be familiar with validation qualifiers and their meanings and use the assigned qualifiers to guide their final assessment of the data. Below are a few basic examples of using qualified validated data during a usability assessment, with the understanding that actual scenarios will vary.

Data that have been qualified as rejected/unusable during validation should not be used for decision-making purposes. In cases where data is not rejected during validation, and only recommended for exclusion, the project team should review all available information to decide whether the data should be used for the intended purpose, and apply final appropriate qualifiers.
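The first pass of this step can be sketched as a triage that separates rejected results from those recommended for exclusion (such as the DOD interim “X” qualifier described in Section 3.3.1) and from everything else. This is a hypothetical helper, not a usability assessment: results it leaves in the `retained` list may still carry J or U qualifiers that the project team must evaluate, as discussed below.

```python
def triage_for_usability(results):
    """Split (result_id, qualifier) pairs into retained, rejected, and team-review lists.

    'R' results are not used for decisions; 'X' (exclusion recommended) results need a
    project-team decision; all others are retained for further usability evaluation.
    """
    retained, rejected, team_review = [], [], []
    for result_id, qualifier in results:
        if qualifier == "R":
            rejected.append(result_id)
        elif qualifier == "X":
            team_review.append(result_id)
        else:
            retained.append(result_id)
    return retained, rejected, team_review


print(triage_for_usability([("MW-1/benzene", "J"), ("MW-2/benzene", "R"), ("MW-3/benzene", "X")]))
```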

For estimated data, the project team should consider any potential bias of the qualified result. High or low biased results may not be appropriate for use in determining background level concentrations. Estimated results near project action levels could present unacceptable uncertainty when determining regulatory compliance and might need additional lines of evidence or historical data to support the usability of a result. Results qualified with high or low bias might also provide additional lines of evidence when outliers are identified during statistical analysis.
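One way to operationalize “estimated results near project action levels” is to flag J-qualified results whose bias could move them across the action level. The sketch below assumes bias indicators written as “J+” (high bias) and “J-” (low bias), and uses a hypothetical `window` of 25% of the action level; both conventions are illustrative assumptions, not guidance values.

```python
def near_action_level(value, qualifier, action_level, window=0.25):
    """Return True if an estimated result's bias could change the compliance conclusion.

    window is a hypothetical fraction of the action level defining "near".
    """
    low, high = action_level * (1 - window), action_level * (1 + window)
    if qualifier == "J+":   # high bias: the true value may be lower than reported
        return action_level <= value <= high
    if qualifier == "J-":   # low bias: the true value may be higher than reported
        return low <= value < action_level
    if qualifier == "J":    # bias direction unknown
        return low <= value <= high
    return False            # unqualified or non-estimated results are not flagged here


print(near_action_level(5.5, "J+", 5.0))  # high-biased result just above a 5.0 action level
print(near_action_level(4.5, "J+", 5.0))  # high-biased result already below the action level
```

Flagged results are candidates for the additional lines of evidence or historical data mentioned above; the flag itself does not decide usability.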

Data qualified as “U” (non-detect) during validation, typically because of laboratory or field blank contamination, are often reported at elevated limits, which reduces measurement sensitivity. Reporting limits of all “U” qualified data should be evaluated against required reporting limits and action levels before drawing conclusions.
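The reporting-limit evaluation described above can be sketched as a check of each U-qualified result's limit against both the required reporting limit and the action level. The input shapes and the function name are hypothetical; a non-detect whose limit exceeds the action level cannot show that the analyte is below that level.

```python
def evaluate_nondetect_sensitivity(nondetects, required_rls, action_levels):
    """Check U-qualified results against required reporting limits and action levels.

    nondetects: list of (sample_id, analyte, reporting_limit) for U-qualified results
    required_rls, action_levels: analyte -> limit, in the same units as the results
    """
    findings = []
    for sample_id, analyte, rl in nondetects:
        required = required_rls.get(analyte)
        action_level = action_levels.get(analyte)
        if required is not None and rl > required:
            findings.append((sample_id, analyte, "exceeds required reporting limit"))
        if action_level is not None and rl > action_level:
            findings.append((sample_id, analyte, "exceeds action level"))
    return findings


nondetects = [("MW-1", "benzene", 2.0), ("MW-2", "benzene", 8.0), ("MW-3", "toluene", 1.0)]
for finding in evaluate_nondetect_sensitivity(
    nondetects, {"benzene": 1.0, "toluene": 1.0}, {"benzene": 5.0, "toluene": 1000.0}
):
    print(finding)
```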

4. DATA QUALITY REVIEW AND USABILITY GUIDANCE

Many regulators have published guidance related to data quality and data usability. It is important to establish what guidelines should and will be used during the project planning stage. Table 1 is a list of commonly used federal and state documents.

Table 1. Links to government validation, data quality, and data usability documents

| Government Organization | Reference |
|---|---|
| Federal Guidance | |
| U.S. Department of Defense (DOD) | General Data Validation Guidelines https://denix.osd.mil/edqw/documents/documents/gen-data-validation-rev1/ |
| | Data Validation Guidelines Module 1: Data Validation Procedure for Organic Analysis by GC/MS https://denix.osd.mil/edqw/documents/documents/module-1/ |
| | Data Validation Guidelines Module 2: Data Validation Procedure for Metals by ICP-OES https://denix.osd.mil/edqw/documents/documents/module-2/ |
| | Data Validation Guidelines Module 3: Data Validation Procedure for Per- and Polyfluoroalkyl Substances Analysis by QSM Table B-15 https://denix.osd.mil/edqw/documents/documents/module-3-data-validation/ |
| | Data Validation Guidelines Module 4: Data Validation Procedure for Organic Analysis by GC https://denix.osd.mil/edqw/documents/documents/module-4-data-val/ |
| U.S. Environmental Protection Agency (USEPA) | Superfund CLP National Functional Guidelines (NFGs) for Data Review https://www.epa.gov/clp/superfund-clp-national-functional-guidelines-nfgs-data-review |
| | Guidance for Labeling Externally Validated Laboratory Analytical Data for Superfund Use |
| | Guidance on Environmental Data Verification and Data Validation https://www.epa.gov/quality/guidance-environmental-data-verification-and-data-validation |
| Regional Guidance | |
| USEPA Region 1 | EPA New England (Region 1) Environmental Data Review Program Guidance https://www.epa.gov/sites/default/files/2018-06/documents/r1-dr-program-guidance-june-2018.pdf |
| USEPA Region 2 | Data Validation Active Standard Operating Procedures (SOPs) https://www.epa.gov/quality/region-2-quality-assurance-guidance-and-standard-operating-procedures |
| USEPA Region 3 | EPA Region 3 Data Validation https://www.epa.gov/quality/epa-region-3-data-validation |
| USEPA Region 4 | EPA Region 4 Data Validation Standard Operating Procedures for Contract Laboratory Program Routine Analytical Services https://www.epa.gov/quality/epa-region-4-data-validation-standard-operating-procedures-contract-laboratory-program |
| USEPA Region 9 | Data Validation & Laboratory Quality Assurance for Region 9 https://www.epa.gov/quality/data-validation-laboratory-quality-assurance-region-9 |
| State Guidance | |
| Alaska Department of Environmental Conservation (ADEC) Division of Spill Prevention and Response Contaminated Sites Program | Minimum Quality Assurance Requirements for Sample Handling, Reports, and Laboratory Data https://dec.alaska.gov/media/18665/quality-assurance-tech-memo-2019.pdf |
| | Laboratory Data Review Checklist https://dec.alaska.gov/media/20872/laboratory-data-review-check-list.docx |
| | Laboratory Data Review Checklist for Air Samples https://dec.alaska.gov/media/22791/laboratory-data-review-check-list-air-samples.docx |
| Connecticut Department of Energy and Environmental Protection (DEEP) | Quality Assurance and Quality Control https://portal.ct.gov/DEEP/Remediation--Site-Clean-Up/Guidance/Quality-Assurance-and-Quality-Control |
| New Jersey Department of Environmental Protection (NJDEP) Site Remediation Program | Data Quality Assessment and Data Usability Evaluation Technical Guidance https://www.nj.gov/dep/srp/guidance/srra/data_qual_assess_guidance.pdf |
| New York State Department of Environmental Conservation (NYSDEC) | DER-10: Technical Guidance for Site Investigation and Remediation, Appendix 2B: Guidance for Data Deliverables and the Development of Data Usability Summary Reports https://www.dec.ny.gov/docs/remediation_hudson_pdf/der10.pdf |
| Texas Commission on Environmental Quality (TCEQ) | Review and Reporting of COC Concentration Data under Texas Risk Reduction Program (TRRP-13) https://www.tceq.texas.gov/assets/public/comm_exec/pubs/rg/rg-366-trrp-13.pdf |

5. REFERENCES AND ACRONYMS

The references cited in this fact sheet, and in the other ITRC EDM Best Practices fact sheets, are included in one combined list that is available on the ITRC web site. The combined acronyms list is also available on the ITRC web site.